TL;DR: Run pip-audit in an isolated Docker container before installing updated dependencies on your dev machine. Build a reusable pipauditdocker image and a pip-audit-proj shell alias that tests your requirements.txt in isolation, before any of those packages touch your local environment.

In my last article, “Python Supply Chain Security Made Easy,” I talked about how to automate pip-audit so you don’t accidentally ship malicious Python packages to production. uv’s delayed installs added some defense in depth, but there wasn’t much protection beyond that for developers on their own machines.

This follow-up fixes that so even dev machines stay safe.

Defending your dev machine

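My recommendation: don’t install directly into a local virtual environment and then run pip-audit. Instead, create a dedicated Docker image for testing dependencies with pip-audit in isolation. Installing a package can run arbitrary code (a build script, for example). By the time a local pip-audit run flags a malicious package, that code may already have executed on your machine.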

The workflow goes like this.

First, update your local dependencies file:

```shell
uv pip compile requirements.piptools --output-file requirements.txt --exclude-newer "1 week"
```

This updates the requirements.txt file (or tweak the command to update your uv.lock file instead), but it doesn’t install anything.

Second, run a command that takes this new requirements file into a temporary Docker container, installs the requirements there, and runs pip-audit on them.

Third, only if that pip-audit test succeeds, install the updated requirements into your local venv.

```shell
uv pip install -r requirements.txt
```
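You may prefer to enforce the gate rather than remember it. The three steps can be chained in a shell function so the local install only happens when the containerized audit exits successfully. This is a minimal sketch and not part of the original setup. The name update_deps is hypothetical, and the function assumes the pipauditdocker image built later in this post. It also leaves out the --ignore-vuln flags you may want to copy from the alias further down.

```shell
# update_deps is a hypothetical helper name, not part of uv or pip-audit.
# It assumes a pipauditdocker image built from the Dockerfile in this post.
update_deps() {
  # Step 1: refresh requirements.txt only; nothing is installed yet.
  uv pip compile requirements.piptools --output-file requirements.txt \
    --exclude-newer "1 week" || return 1

  # Step 2: install and audit inside a throwaway container.
  # Add your own --ignore-vuln flags to the pip-audit call as needed.
  if ! docker run --rm \
    -v pip-audit-cache:/root/.cache \
    -v "$(pwd)/requirements.txt:/workspace/requirements.txt:ro" \
    pipauditdocker \
    /bin/bash -c "uv pip install --quiet -r /workspace/requirements.txt && /venv/bin/pip-audit --skip-editable"
  then
    echo "Audit failed; local environment left untouched." >&2
    return 1
  fi

  # Step 3: reached only when the containerized audit passed.
  uv pip install -r requirements.txt
}
```

Run update_deps from your project root, the same place you would run the pip-audit-proj alias.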

The pip-audit Docker image

What do we use for our Docker testing image? There are, of course, a million ways to do this. Here’s one optimized for building Python packages. It leans heavily on uv’s and pip-audit’s caching to make subsequent runs much, much faster.

Create a Dockerfile with this content:

```dockerfile
# Image for installing python packages with uv and testing with pip-audit
# Saved as Dockerfile
FROM ubuntu:latest

RUN apt-get update
RUN apt-get upgrade -y
RUN apt-get autoremove -y
RUN apt-get -y install curl

# Dependencies for building Python packages
RUN apt-get install -y gcc
RUN apt-get install -y build-essential
RUN apt-get install -y clang
RUN apt-get install -y openssl
RUN apt-get install -y checkinstall
RUN apt-get install -y libgdbm-dev
RUN apt-get install -y libc6-dev
RUN apt-get install -y libtool
RUN apt-get install -y zlib1g-dev
RUN apt-get install -y libffi-dev
RUN apt-get install -y libxslt1-dev

ENV PATH=/venv/bin:$PATH
ENV PATH=/root/.cargo/bin:$PATH
ENV PATH=/root/.local/bin/:$PATH
ENV UV_LINK_MODE=copy

# Install uv
RUN curl -LsSf https://astral.sh/uv/install.sh | sh

# set up a virtual env to use for temp dependencies in isolation.
RUN --mount=type=cache,target=/root/.cache uv venv --python 3.14 /venv

# test that uv is working
RUN uv --version

WORKDIR "/"

# Install pip-audit
RUN --mount=type=cache,target=/root/.cache uv pip install --python /venv/bin/python3 pip-audit
```

This installs a bunch of Linux libraries that some Python packages need when they have to be compiled from source. It takes a moment, but you only need to build the image once. After that, you’ll run it again and again. If you want a newer version of Python later, change the version in uv venv --python 3.14 /venv. Even then, rebuilds reuse the apt-get steps from Docker’s layer cache.

Next, build the image with a fixed tag so an alias can refer to it by name:

```shell
# In the same folder as the Dockerfile above.
docker build -t pipauditdocker .
```
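Before wiring up the alias, a quick sanity check confirms that the image exists and that both tools run inside it. This is a small sketch. The function name check_pipauditdocker is hypothetical, and the check relies only on the standard docker image inspect and docker run commands plus each tool’s --version flag.

```shell
# check_pipauditdocker is a hypothetical helper, not part of Docker or pip-audit.
check_pipauditdocker() {
  # docker image inspect exits non-zero if the tag doesn't exist locally.
  if ! docker image inspect pipauditdocker >/dev/null 2>&1; then
    echo "Image pipauditdocker not found; run the docker build step first." >&2
    return 1
  fi
  # Both tools should print a version from inside a throwaway container.
  docker run --rm pipauditdocker uv --version &&
    docker run --rm pipauditdocker /venv/bin/pip-audit --version
}
```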

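Finally, we need to run the container with a few bells and whistles. Add caching via a named volume so subsequent runs are very fast: -v pip-audit-cache:/root/.cache. Then bind-mount the requirements.txt from whatever directory you’re in, read-only, to where the container expects it: -v "$(pwd)/requirements.txt:/workspace/requirements.txt:ro". Inside the alias, the quotes and the $ are backslash-escaped. That way, $(pwd) is evaluated each time you run the alias rather than once when your shell config loads.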

Here is the alias to add to your .bashrc or .zshrc that puts it all together:

```shell
alias pip-audit-proj="echo '🐳 Launching isolated test env in Docker...' && \
  docker run --rm \
    -v pip-audit-cache:/root/.cache \
    -v \"\$(pwd)/requirements.txt:/workspace/requirements.txt:ro\" \
    pipauditdocker \
    /bin/bash -c \"echo '📦 Installing requirements from /workspace/requirements.txt...' && \
      uv pip install --quiet -r /workspace/requirements.txt && \
      echo '🔍 Running pip-audit security scan...' && \
      /venv/bin/pip-audit \
        --ignore-vuln CVE-2025-53000 \
        --ignore-vuln PYSEC-2023-242 \
        --skip-editable\""
```

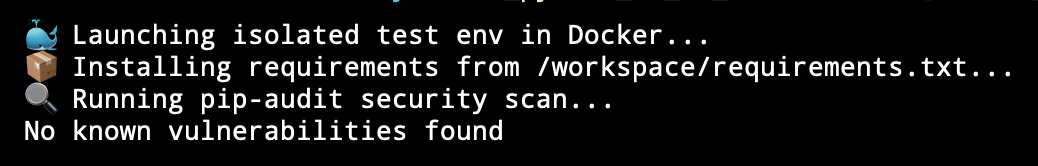

That’s it! Once you reload your shell, all you have to do is type is pip-audit-proj when you’re in the root of your project that contains your requirements.txt file. You should see something like this below. Slow the first time, fast afterwards.
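If the nested escaping in the alias feels fragile, the same command can be written as a shell function, where $(pwd) needs no backslashes. This variant (the name pip_audit_proj is hypothetical) also refuses to run when the current directory has no requirements.txt. With -v, Docker creates a missing host path as a directory, so a stray run would otherwise leave an empty requirements.txt folder in your project.

```shell
# pip_audit_proj is a hypothetical function equivalent to the pip-audit-proj alias.
pip_audit_proj() {
  # Docker's -v creates a missing host path as a directory, so stop early
  # instead of leaving an empty "requirements.txt" folder behind.
  if [ ! -f requirements.txt ]; then
    echo "No requirements.txt in $(pwd); run this from your project root." >&2
    return 1
  fi

  echo '🐳 Launching isolated test env in Docker...'
  # The function returns docker's exit status, which is pip-audit's
  # (or uv's, if the install inside the container fails).
  docker run --rm \
    -v pip-audit-cache:/root/.cache \
    -v "$(pwd)/requirements.txt:/workspace/requirements.txt:ro" \
    pipauditdocker \
    /bin/bash -c "echo '📦 Installing requirements from /workspace/requirements.txt...' && \
      uv pip install --quiet -r /workspace/requirements.txt && \
      echo '🔍 Running pip-audit security scan...' && \
      /venv/bin/pip-audit \
        --ignore-vuln CVE-2025-53000 \
        --ignore-vuln PYSEC-2023-242 \
        --skip-editable"
}
```

Because its exit status is meaningful, it chains naturally with the local install: pip_audit_proj && uv pip install -r requirements.txt.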

Protecting Docker in production too

Let’s handle one more situation while we’re at it: you’re running your Python app IN Docker. Part of the Docker build configures the image and installs your dependencies, so we can add a pip-audit check there too:

```dockerfile
# Dockerfile for your app (different from the validation image above)
# All the steps to copy your app over and configure the image ...
# After creating a venv in /venv, copying your requirements.txt to /app,
# and installing those requirements into /venv (pip-audit audits the
# environment it runs in, so the packages must be installed there).

# Check for any sketchy packages.
# We are using a cache mount rather than a volume because
# we want to cache build-time activity, not runtime activity.
RUN --mount=type=cache,target=/root/.cache uv pip install --python /venv/bin/python3 --upgrade pip-audit
RUN --mount=type=cache,target=/root/.cache /venv/bin/pip-audit --ignore-vuln CVE-2025-53000 --ignore-vuln PYSEC-2023-242 --skip-editable

# ENTRYPOINT ... for your app
```
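In an interactive terminal, BuildKit’s default progress display tends to collapse the output of RUN steps that succeed, so you may never see pip-audit’s report on a clean build. When you want to confirm the audit actually ran, a plain-progress build prints it. The wrapper below is a minimal sketch. build_app and the myapp tag are placeholder names for your own build command.

```shell
# build_app and the "myapp" tag are placeholders for your own build command.
build_app() {
  # --progress=plain prints every RUN step's full output, including pip-audit's.
  if docker build --progress=plain -t myapp .; then
    echo "Build succeeded, so the pip-audit step passed."
  else
    echo "Build failed; if the pip-audit step is the culprit, its report is above." >&2
    return 1
  fi
}
```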

Conclusion

There you have it. Two birds, one Docker stone. Our first Dockerfile built a reusable Docker image named pipauditdocker to run isolated tests against a requirements file. The second shows how to make your docker or docker compose build fail outright when there’s a bad dependency, so it never slips into production.

Cheers

Michael