TL;DR: Stop building Python from source in Docker (10+ minutes). Use uv venv --python 3.14 /venv to install Python in seconds. Pre-install common packages in a base image and use uv pip install for near-instant builds.

uv has changed the landscape

A lot has been written [1, 2, 3] about uv’s new features that let it not just work within Python but manage Python itself. Many people have emailed me or reached out on social media to say this will have a big impact. As a result, I just had Charlie Marsh (creator and lead over at Astral) on Talk Python to dive into the changes and their implications.

And all of these conversations got me thinking:

I should really rework my infrastructure workflow to fully leverage uv.

How we run Talk Python

I haven’t yet written up how we run DevOps at Talk Python. It’s something I’ve been meaning to do, since our setup has undergone big changes recently.

The short version is that we’ve consolidated our 6 smaller servers into one properly big machine (8 CPUs) that runs 17 multi-tier apps, all within Docker containers managed with Docker Compose. You can get a sense of some of these apps by looking at our infrastructure status page, which also runs there.

To keep things simple and consistent, we have two Docker images that all the apps we write are based on. One provides a custom Ubuntu Linux base, and on top of that we build a custom Python 3 image with much of the shared tooling configured the same way.

The Python 3 image had previously been managing Python by building it from source. And before you tell me there are official Python images, I know. But they aren’t based on Ubuntu, and I’d rather have more complete control over my images. Plus, if I wanted to use another image that isn’t based on a Python image, I’d have to manage Python somehow anyway.

Building Python 3.14 from source is slow! It took up to 10 minutes to build that image. Luckily Docker caches it and we’ve only had to rebuild it 4 times this year.

And now a better way

One of the exciting new features of uv is the ability to create a virtual environment based on a specified Python interpreter, which uv manages and installs if needed. This takes just a few seconds rather than 10 minutes! Here’s the command:

```sh
uv venv --python 3.14 /venv
```

With that capability, we can create a new hierarchy of images:

```
linux
  |
  |- python-base   # (starting from the uv python command)
       |
       |- your-app-container
```

A Dockerfile for python-base might look like this:

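```dockerfile
# our customized Ubuntu (Dockerfile comments must sit on their own line)
FROM linuxbase:latest

# Put the venv first on PATH and mark it as the active env for `uv pip`
ENV VIRTUAL_ENV=/venv
ENV PATH=/venv/bin:$PATH
# uv's installer uses ~/.local/bin (newer releases) or ~/.cargo/bin (older ones)
ENV PATH=/root/.local/bin:/root/.cargo/bin:$PATH

# install uv (requires curl in the base image)
RUN curl -LsSf https://astral.sh/uv/install.sh | sh
# set up a virtual env to use for whatever app is destined for this container.
RUN uv venv --python 3.14 /venv
```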
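A quick way to check that the image ends up with the interpreter you asked for is a tiny script run with the container’s `python`. The sketch below (a hypothetical `check_env.py`; the file name and fields are illustrative, not part of the original setup) uses only the standard library. Inside a venv, `sys.prefix` points at the environment, while `sys.base_prefix` points at the Python installation it was created from, which here is the one uv installed.

```python
import sys


def describe_interpreter() -> dict:
    """Report which Python is running and whether it lives inside a virtual environment."""
    return {
        "executable": sys.executable,
        "version": ".".join(str(part) for part in sys.version_info[:3]),
        # In a venv, sys.prefix is the venv directory (e.g. /venv), while
        # sys.base_prefix is the installation the venv was created from.
        "in_venv": sys.prefix != sys.base_prefix,
        "prefix": sys.prefix,
        "base_prefix": sys.base_prefix,
    }


if __name__ == "__main__":
    for key, value in describe_interpreter().items():
        print(f"{key}: {value}")
```

If you copy it into the image and run it as `python check_env.py`, it should report a 3.14.x version, a prefix of `/venv`, and `in_venv: True`, because `/venv/bin` comes first on `PATH`.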

Making it really fly

With a few impure practicalities, we can really make this fast.

For example, if most of our apps are based on Flask and use Granian, then we could add these lines to the python-base image:

```dockerfile
RUN uv pip install --upgrade flask pydantic beanie
RUN uv pip install --upgrade "granian[pname]"
# No, these packages should not have their versions pinned.
```

Because uv is SO fast, and because these libraries already live in the base image’s /venv, later installs of the same libraries are nearly instant whenever an app asks for a version that’s already there, which is mostly what happens.

Then be sure to run uv rather than pip for your app level dependencies. For example:

```dockerfile
RUN uv pip install -r requirements.txt
# These packages do have their versions pinned, but mostly overlap with latest because I'm not a monster.
```
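To make that overlap concrete, here is a rough sketch, built around a hypothetical `already_satisfied` helper that is not how uv actually resolves dependencies. It reads exact `name==version` pins and splits them into ones the current environment already has and ones it doesn’t, using only `importlib.metadata`. Pins in the first list are the ones that cost essentially nothing when the base image already provides them. It compares version strings literally and ignores transitive dependencies, so treat it as an illustration rather than a resolver.

```python
from importlib.metadata import PackageNotFoundError, version


def already_satisfied(pinned_lines):
    """Split 'name==version' pins into (already installed, still needed)."""
    satisfied, missing = [], []
    for raw in pinned_lines:
        # Drop trailing comments and environment markers, then whitespace.
        line = raw.split("#", 1)[0].split(";", 1)[0].strip()
        if not line or "==" not in line:
            continue  # this sketch only handles exact '==' pins
        name, wanted = (part.strip() for part in line.split("==", 1))
        name = name.split("[", 1)[0]  # ignore extras such as granian[pname]
        try:
            installed = version(name)
        except PackageNotFoundError:
            installed = None
        (satisfied if installed == wanted else missing).append(line)
    return satisfied, missing
```

Inside the app container you might call it as `already_satisfied(open("requirements.txt"))`. The shorter the second list, the less work the app-level `uv pip install` has left to do.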

A working Flask example

Here’s an example you can play with that runs the “Hello World” Flask app with Granian in Docker:

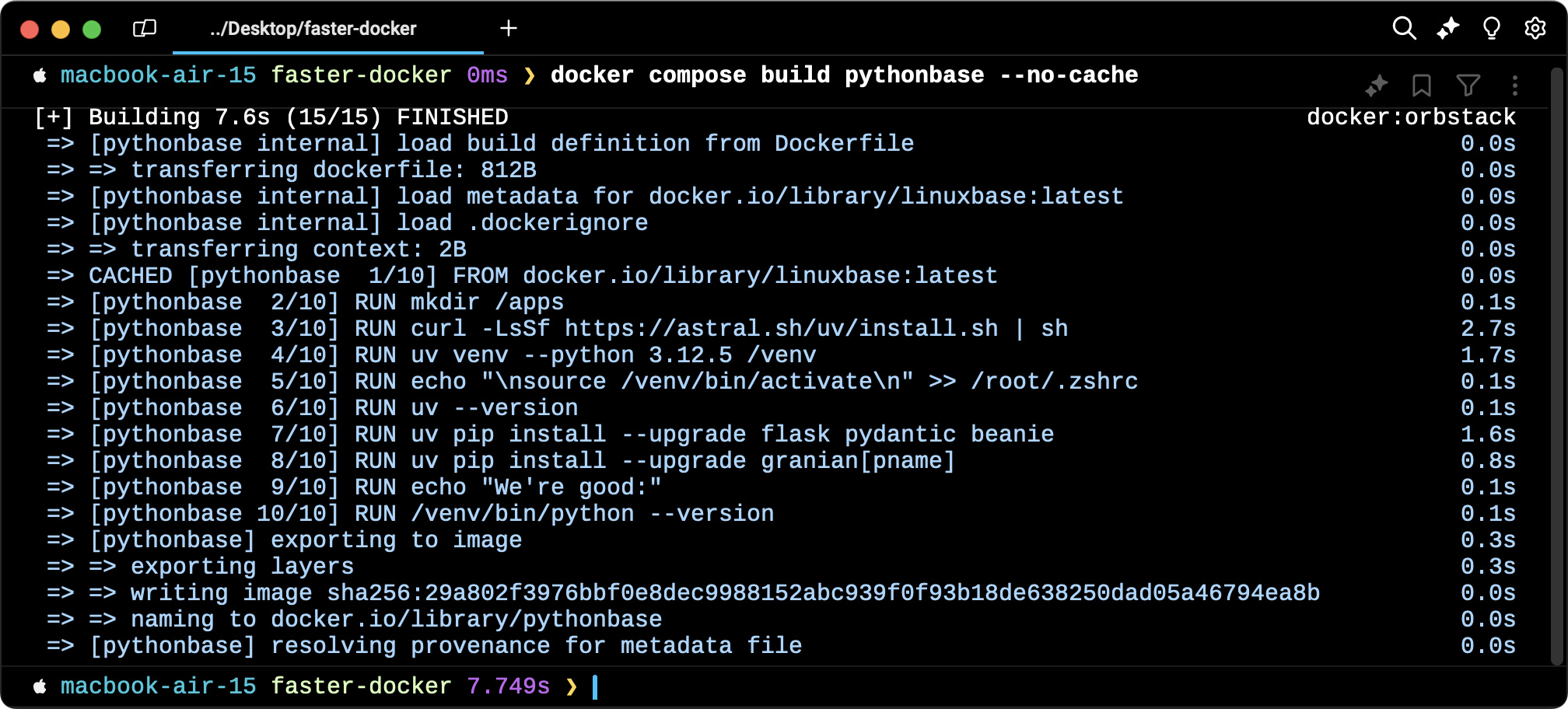

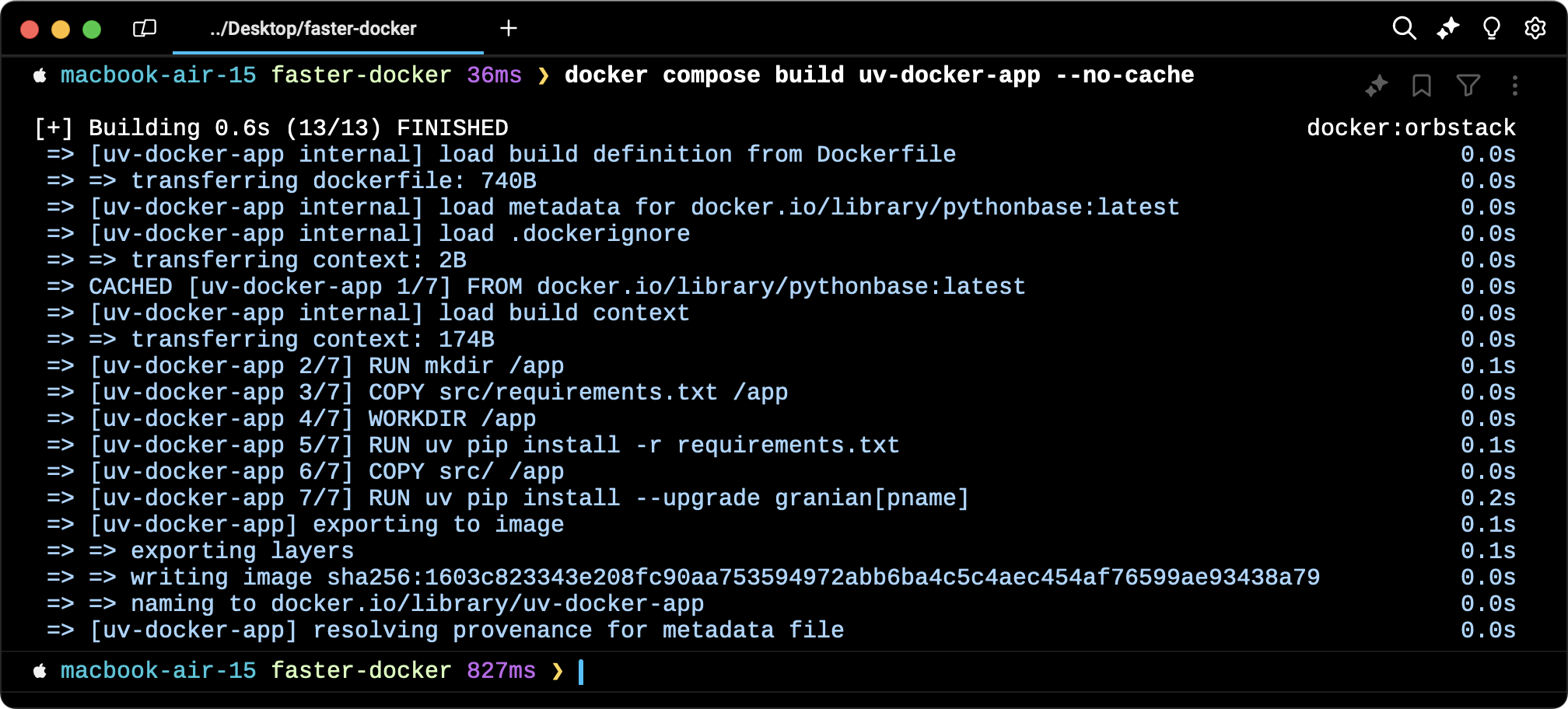

Just unzip it and run `docker compose build` in the same folder as compose.yaml to build everything and see how quickly it all comes together. In particular, once linuxbase is built, run `docker compose build pythonbase --no-cache` to see how quickly we get from Ubuntu to Python 3 with uv. Then start the website with `docker compose up`.
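If you want to put your own numbers next to the 7 seconds and 800ms reported below, a small wrapper can time the rebuild for you. This is a minimal sketch built around a hypothetical `time_command` helper (not part of the example project). It runs a command, raises if the command fails, and reports the wall-clock time on stderr so the build’s own output stays readable.

```python
import shlex
import subprocess
import sys
import time


def time_command(cmd):
    """Run cmd (a list of arguments), raise if it fails, and return elapsed seconds."""
    start = time.perf_counter()
    subprocess.run(cmd, check=True)  # CalledProcessError on a non-zero exit
    elapsed = time.perf_counter() - start
    # Report on stderr so the command's own stdout output is left untouched.
    print(f"{shlex.join(cmd)} took {elapsed:.1f}s", file=sys.stderr)
    return elapsed
```

For instance, from the folder containing compose.yaml, `time_command(["docker", "compose", "build", "pythonbase", "--no-cache"])` times the same uncached pythonbase rebuild described above.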

Some screenshots

Python base image installing uv and Python plus some common dependencies in 7 seconds:

Our app’s container, with its dependencies installed and everything set up, in 800ms!

It’s already in action

So the next time you listen to a podcast episode or take one of our courses, you can think about this running behind the scenes making our continuous deployment story even better.