TL;DR: The opposite of cloud-native isn’t “lift-and-shift” or building your own data center. It’s stack-native: building your app with just enough full-stack building blocks to run reliably with minimal complexity. We run 28 apps serving 9M requests/month on one $65/mo Hetzner server. Same setup in AWS? $1,226/mo.

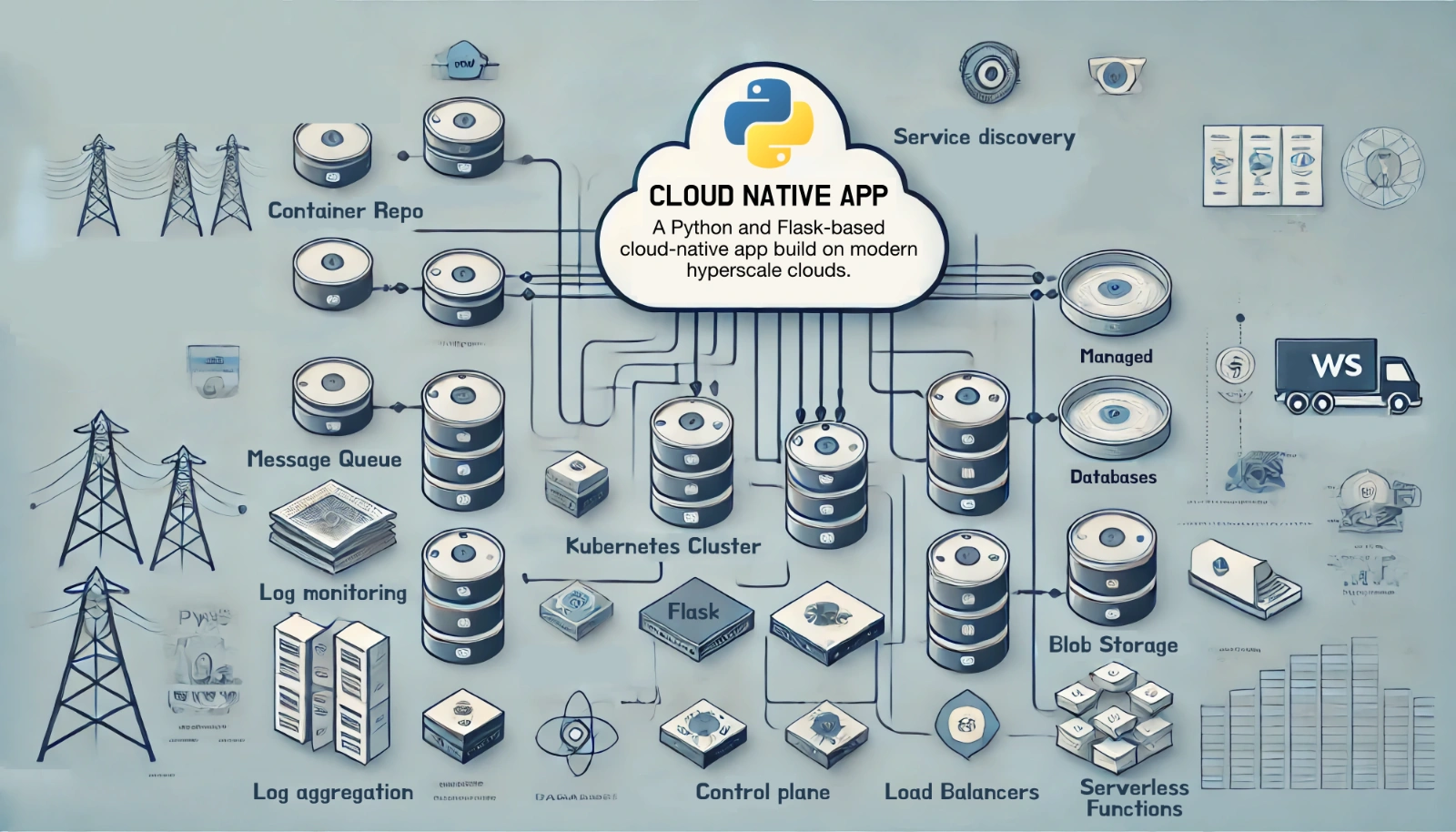

There seems to be a lot of contention lately about whether you should go all-in on the cloud or keep things as simple as possible. Those on the side of “build for the cloud” often frame this as cloud-native. By that, I believe they mean you should consider every service your cloud provider offers when architecting your application, and that, generally, the more services you pull together, the better. See Microsoft’s and Google’s definitions of cloud-native.

“What is the opposite of cloud-native?”

In this essay, I’ll define a new term for the modern opposite of cloud-native. I think you’ll find it appealing.

Those on the cloud side look back, with valid skepticism, at the dark days of running monolithic applications directly on physical servers at your own location. These apps were often little more than a single application and a huge local database. Maybe they were even client-server desktop apps (gasp!).

If you’re not up on all the cloud services and managed this or that, you’re not cloud-native. You’d probably do what they call “lifting and shifting” your app to the cloud. Did you have one huge server in the office? Well, now you get one huge server in AWS EC2 and copy your app to it. You’ll also pay extreme prices for that privilege. You’re really not with the times, are you?

This particular view of a mostly bygone era is not the opposite of cloud-native. The fallacy here is comparing two ways of running applications that belong to different eras. Cloud-native is one view of how to build apps in 2024. This lift-and-shift style is a view of how to run your own apps in 2001. It’s not constructive to debate them as peers.

I recently watched a livestream about moving out of AWS. Many of the people participating in the conversation were contrasting two worlds: cloud vs. on-prem. Either you run in AWS/Azure, or you build a data center: you string ethernet cables, you buy a backup generator, and you hire a couple of engineers to keep it all running when hard drives fail.

What? No. This is not the alternative.

The livestream conversation was about a company moving into another managed data center. That data center came with generators. It already had ethernet. In 2024, the tradeoff is not “cloud or build your own data center.” Please do not let people drag you into these false dichotomies.

You should be wary of these merchants of complexity. These hyperscale cloud providers want you to buy fully into all their services. The amount of lock-in this creates is tremendous. Once your bill leaps far beyond what you expected, it’s tough to change course.

The opposite of cloud-native is stack-native

Consider that insane diagram at the top of this essay. There’s a tiny bit in the center labeled Flask, surrounded by every cloud-native service you might want. One portion of our app might need to scale a bit, so we add Lambda/serverless functions. We broke our single Flask-based API into 100 serverless endpoints, so now we need something to manage their deployment. What about logs and monitoring across all these services? We need a few log services for that. And we may need to scale the Flask portion, so Kubernetes! And on it goes.

I present to you an alternative philosophy: stack-native.

“Stack-native is building your app with just enough full-stack building blocks to make it run reliably with minimal complexity.”

This is what it looks like when displayed at the same scale as our cloud-native diagram:

It’s a breath of fresh air, isn’t it? But let’s zoom in so you can actually see it!

What do we have in this stack-native diagram?

- We have Flask at the center still.

- Flask might be managed by Docker and scaled across multiple web workers, or it could run directly on the VM if that makes more sense.

- Those workers run in a WSGI Python server, in this case Granian.

- The web app is exposed to the internet via nginx.

- The self-hosted database can live here too (Postgres or MongoDB).

- We have a server-mapped volume for all our database data, container images, and more.

That’s it. And everything in that picture is free and open source other than the cloud VM. It all runs on a single medium-sized server at a modern, affordable cloud host (e.g., Digital Ocean or Hetzner).
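As a minimal sketch of what sits at the center of that stack: the post uses Flask, but to keep the example dependency-free, this is a bare WSGI callable, which is the same interface a Flask `app` object exposes to a WSGI server such as Granian. The module name `app.py`, the `/health` route, and the Granian command in the docstring are hypothetical, not the author's actual configuration.

```python
"""app.py: hypothetical minimal WSGI app standing in for the Flask app."""
import json


def app(environ, start_response):
    """A bare WSGI callable; a Flask `app` object exposes this same interface.

    Assumed invocation behind nginx (not the author's actual config):
        granian --interface wsgi --workers 4 app:app
    """
    path = environ.get("PATH_INFO", "/")
    if path == "/health":
        status, payload = "200 OK", {"status": "ok"}
    else:
        status, payload = "404 Not Found", {"error": "not found", "path": path}

    body = json.dumps(payload).encode("utf-8")
    start_response(status, [
        ("Content-Type", "application/json"),
        ("Content-Length", str(len(body))),
    ])
    return [body]  # WSGI responses are an iterable of bytes
```

In a setup like this, scaling the app usually means raising the worker count on the same box rather than adding another managed service.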

Is this lifted-and-shifted? I don’t think so. It’s actually closer to Kubernetes than to old-timey client-server on-prem.

Would you have to buy a generator? Run ethernet? Fix hardware? Of course not. It runs in a state-of-the-art data center with global reach. It’s just not cloud-native. It’s stack-native, and that is a good thing.

Is stack-native for toy apps?

No. We have a very similar setup powering Talk Python, the courses, the mobile APIs, and much, much more. Across all our apps and services, we receive about 9M Python/database-backed requests per month (with no caching, because it’s not needed). And we handle about 10 TB of traffic, with 1 TB of it served straight out of Python.

Here’s the crazy part. All of our infrastructure runs on one medium-sized server in a US-based Hetzner data center. We have a single 8 CPU / 16 GB RAM server that we partition across 28 apps and databases using Docker. Most of these apps are as simple as, or simpler than, the stack-native diagram above. For this entire setup, including bandwidth, we pay $65/month: $25/mo for the server and another $40 for bandwidth.

I just finished some tentative load testing using the amazing Locust.io framework. At peak, this setup running nginx + Granian + Python + Pyramid + MongoDB could handle over 100M Python requests per month. For $25.
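Monthly request counts are easier to compare as average requests per second. This sketch assumes a 30-day month and evenly spread traffic, both simplifications; real traffic is bursty, so peak-hour rates run higher than these averages.

```python
# Assumes a 30-day month and evenly spread traffic (a simplification).
SECONDS_PER_MONTH = 30 * 24 * 60 * 60  # 2,592,000 seconds


def avg_requests_per_second(requests_per_month: float) -> float:
    """Convert a monthly request count into an average per-second rate."""
    return requests_per_month / SECONDS_PER_MONTH


current = avg_requests_per_second(9_000_000)     # current traffic, from the post
capacity = avg_requests_per_second(100_000_000)  # load-test figure, from the post

print(f"current:  {current:.2f} req/s")        # current:  3.47 req/s
print(f"capacity: {capacity:.1f} req/s")       # capacity: 38.6 req/s
print(f"headroom: {capacity / current:.1f}x")  # headroom: 11.1x
```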

In contrast, what would this setup cost in AWS? Well, the server is about $205/month. The bandwidth out of that server is another $100/mo. If we put all our bandwidth through AWS (for example, MP3s and videos through S3), the price jumps by another whopping $921. That brings the total to $1,226/mo.
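The monthly figures quoted above tally up as follows. The dollar amounts are the post's own estimates, not a fresh quote from any price list; the dictionary keys are just labels chosen for this sketch.

```python
# Monthly cost estimates in USD, taken from the figures in this post.
hetzner = {"server": 25, "bandwidth": 40}
aws = {"ec2_server": 205, "server_bandwidth": 100, "s3_media_bandwidth": 921}

hetzner_total = sum(hetzner.values())
aws_total = sum(aws.values())

print(f"Hetzner: ${hetzner_total}/mo")                        # Hetzner: $65/mo
print(f"AWS:     ${aws_total:,}/mo")                          # AWS:     $1,226/mo
print(f"AWS costs {aws_total / hetzner_total:.1f}x as much")  # AWS costs 18.9x as much
print(f"Yearly difference: ${(aws_total - hetzner_total) * 12:,}")  # Yearly difference: $13,932
```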

The contrast is stark. If we chose cloud-native, we’d be tied into CloudFront, EKS, S3, EC2, etc. That’s the way you use the cloud, you noobie. Let the company cover the monthly costs.

But stack-native can move. We ran it at Digital Ocean for a few years. When a company like Hetzner opens a data center in the US at one-sixth the price, we can take our setup and move. The hardest parts of this are Let’s Encrypt and DNS. There is nearly zero lock-in.

The final takeaway

It may sound like I’m telling you never to use Kubernetes, never to use PaaS, and so on. That’s not quite it, though. You can definitely choose PaaS. Rather than using the cloud provider’s PaaS, maybe host your own instance of Coolify? You want to run serverless? Maybe Knative works. Whatever you pick, just be very cognizant of the lock-in and the ultimate price you pay in future flexibility. Try to fit it all into a single box, even if that box is a pretty big VM in the cloud.

Get the whole Python in production series

I plan to write a whole series focused on this topic. Please consider subscribing to the RSS feed here or joining the mailing list at Talk Python.

What do you think?

If this article made you think, or you just want to share your thoughts, here are a few places where you can join the discussion (there are no comments directly on my blog):