On Wednesday, we launched our Black Friday Sale over at Talk Python Training.

If you know me, you know that I’d much rather just offer a fair price year-round (which I think we do) and avoid hyping these sales. But many, many people asked for it, so we’ve done this for the past few years, and it seems to have been very appreciated by our users.

Generally, our infrastructure has handled Black Friday fine. Yes, it’s busy on the first day for sure, but not melt-your-servers busy.

Until this year.

After sending out the announcement, I fired up glances on our web server just to see how it was going.

The server was at 85% CPU usage … and going up.

88%, 91%, 92%, … Uh oh.

It wasn’t going to be able to handle much more. But there was a surprise: it wasn’t the 8 uWSGI Python worker processes getting pounded. It was nginx. I wish I had taken a screenshot, but here’s roughly what you would have seen:

| Process | CPU usage |
|---|---|
| nginx worker 1 | `*************************************` |
| nginx worker 2 | `****************************************` |
| uWSGI worker 1 | `**` |
| uWSGI worker 2 | `****` |
| uWSGI worker 3 | `*` |
| uWSGI worker 4 | `**` |
| MongoDB (on a dedicated server) | `****` |
| ... | |

This was so surprising to me. In case you’re unfamiliar with this deployment topology, here’s what each part is doing:

- nginx (static content)
  - Direct web browser connection
  - Terminating SSL
  - Sending HTML/CSS/image content to clients
  - gzipping relevant content (HTML, CSS, …)
- uWSGI (dynamic Python behaviors)
  - The “app” of our Python app
  - Running all our Python code
  - Talking to MongoDB for DB queries and updates
  - Queueing up emails
  - Basically everything else
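For readers new to this split, here is a minimal sketch of the kind of WSGI callable that uWSGI hosts on the Python side. It is not Talk Python Training’s code: the handler body is hypothetical, and `application` is simply the conventional WSGI entry-point name. The point is what is *missing*: SSL, gzip, and static files never reach this function, because nginx has already dealt with them.

```python
import html


def application(environ, start_response):
    """A minimal WSGI app of the kind uWSGI runs behind nginx (illustrative only).

    By the time a request arrives here, nginx has already terminated SSL
    and served any static files; gzipping the response is also left to nginx.
    """
    path = environ.get("PATH_INFO", "/")
    # Hypothetical dynamic work: a real app would query MongoDB, queue emails, etc.
    body = f"<h1>Dynamic page for {html.escape(path)}</h1>".encode("utf-8")
    start_response(
        "200 OK",
        [
            ("Content-Type", "text/html; charset=utf-8"),
            ("Content-Length", str(len(body))),
        ],
    )
    return [body]
```

To poke at it locally without nginx or uWSGI, the standard library’s `wsgiref.simple_server.make_server("", 8000, application).serve_forever()` will serve it on port 8000.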

The surprise is that Python handled Black Friday perfectly. It was mostly idling along, happy to serve up requests in the “complicated”, data-driven part of our app.

It was nginx, just trying to do SSL, gzip, and the rest of the static work, that was killing the system. And nginx is considered fast! See “Why is Nginx so fast?”

It is worth pointing out that I have endlessly optimized the Python <-> MongoDB interaction, and we have honed the DB indexes to a sharp point. Even so, I still expected that area to be the point of contention.

So next time you get into that debate about why you can’t use Python because it’s not compiled, it has that GIL thing, and we should use Node.js or whatever, feel free to point back to this post.

And to wrap things up, thank you to everyone who cared enough to check out our courses even if they almost killed the server! ;)

Reflections and an update

After posting this article and having a few conversations on social media, I think I know the cause (though it doesn’t change my surprise or my general point of view).

Looking at the most commonly requested Black Friday page, it turns out there are a lot of requests we could offload (with added complexity, of course).

On the page training.talkpython.fm/courses/all, there are:

- 1 HTML response (via Python)

- 12 CSS requests (many bundled, but some need to be separate like Font Awesome)

- 43 image requests

- 1 JavaScript request

The total size of the page is around 7 MB, with the HTML only 7.5 kB. At the time of this Black Friday onslaught, we were not using a CDN because the content was served plenty fast and juggling dev vs. CDN URLs in production is a pain.

But this whole experience opened my eyes to the fact that we could push 56 of the 57 requests to a CDN and drastically reduce the amount of content (hence load) we’re putting on nginx.
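The arithmetic behind the 56-of-57 figure is easy to check. This small sketch recomputes it from the request counts listed above and, assuming decimal units (1 MB = 1,000 kB) for the approximate 7 MB total, also shows how little of the page weight is the Python-rendered HTML. Treating every non-HTML request as CDN-eligible is an assumption of the sketch.

```python
# Request counts for training.talkpython.fm/courses/all, as listed in the post.
page_requests = {
    "html": 1,        # rendered by Python (uWSGI)
    "css": 12,
    "image": 43,
    "javascript": 1,
}

# Assumption for this sketch: everything except the HTML document is static and CDN-eligible.
CDN_ELIGIBLE = {"css", "image", "javascript"}

total_requests = sum(page_requests.values())
cdn_requests = sum(n for kind, n in page_requests.items() if kind in CDN_ELIGIBLE)

# Approximate page weight from the post, in kB (assuming 1 MB = 1,000 kB).
total_kb = 7_000
html_kb = 7.5

print(f"{cdn_requests} / {total_requests} requests could move to a CDN")  # 56 / 57 ...
print(f"That is {cdn_requests / total_requests:.1%} of requests")         # 98.2%
print(f"and {(total_kb - html_kb) / total_kb:.1%} of the bytes")          # 99.9%
```

By bytes, the case for a CDN is even stronger than by request count: nearly everything nginx was shipping was static.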

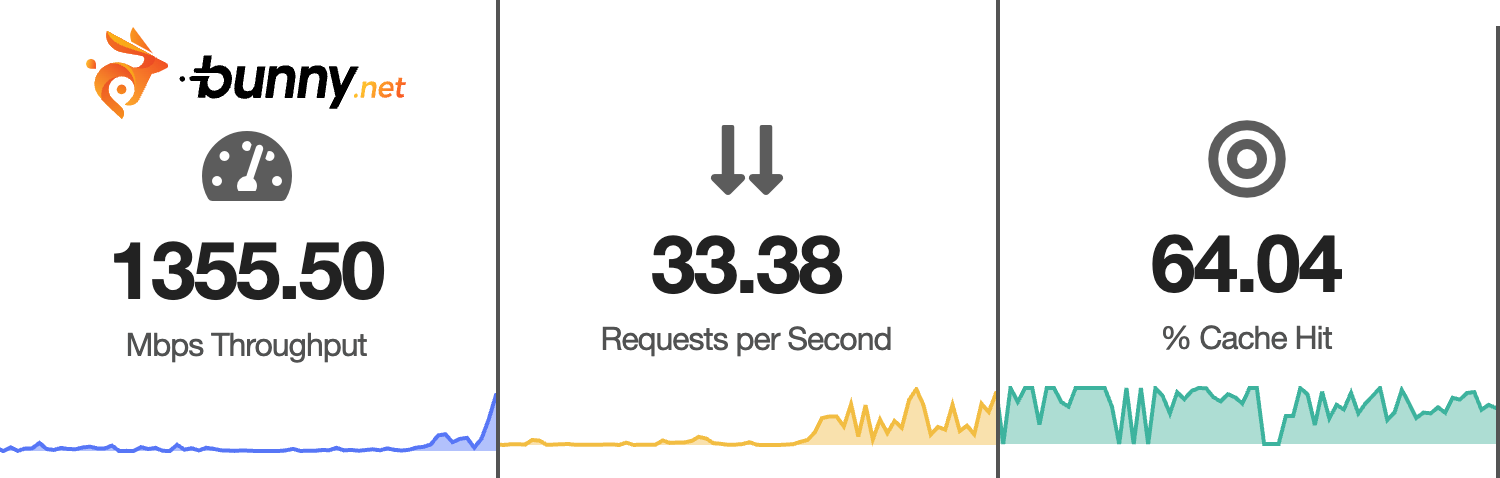

I chose Bunny.net CDN. After just 30 minutes, you can see the load that was put upon our nginx process, now shared across the world (and content closer to the users):

So far, Bunny has been fantastic. It only took about 15 minutes to set up the CDN, a custom domain, and SSL. Then came the hour of changing all the CSS, JS, and IMG links across Talk Python Training :).
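One common way to tame the dev vs. CDN URL juggling is to route every asset link through a single helper, so that switching environments becomes a configuration change rather than an hour of edits. This is a hypothetical sketch, not what Talk Python Training does: `static_url` and the `STATIC_CDN_URL` environment variable are names made up for illustration, and `https://cdn.example.com` stands in for a real CDN hostname.

```python
import os
from typing import Optional

# Hypothetical setting: empty in development, the CDN base URL in production.
DEFAULT_CDN_BASE = os.environ.get("STATIC_CDN_URL", "")


def static_url(path: str, cdn_base: Optional[str] = None) -> str:
    """Return the URL a template should use for a static asset (illustrative helper)."""
    base = DEFAULT_CDN_BASE if cdn_base is None else cdn_base
    path = "/" + path.lstrip("/")
    if not base:
        # Development: a root-relative URL, served by the local web server.
        return path
    # Production: point the browser at the CDN host instead of our own server.
    return base.rstrip("/") + path


print(static_url("static/css/site.css", cdn_base=""))
# /static/css/site.css
print(static_url("/static/img/logo.png", cdn_base="https://cdn.example.com/"))
# https://cdn.example.com/static/img/logo.png
```

Templates would call the helper for every CSS, JS, and image link; the environment, not the markup, then decides where the bytes come from.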

At $0.01/GB, gaining access to this much distributed infrastructure seems like a great tradeoff. As of this writing, we have just passed our first GB of content over the CDN. That’s a penny well spent.

Another update and Cyber Monday

The fun thing about Black Friday sales is that they are generally bookended by Cyber Monday.

Earlier, I said I wished I had taken a screenshot when we sent out the announcement for the opening of Black Friday. It turns out the closing day of our sale draws roughly the same amount of traffic and interest, and this time I did take a screenshot.

With our Bunny.net CDN setup fully in place, things were quite different: our infrastructure was busy but happy. Now I see why our nginx server was hurting so much.

Here’s the real-time traffic on the CDN:

1.4 Gb/sec of static files. Glad we moved those requests to the edge.

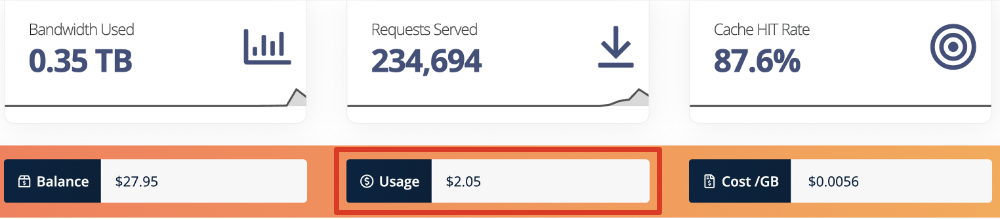

And here is our app server at the exact same moment, the one that nearly melted. Note: Cache misses are generally pulled from other CDN nodes, not this server.

7.5% CPU usage total and only 3% across both nginx workers.
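The note about cache misses describes tiered caching (often called an origin shield): an edge node that lacks a file asks another CDN node before going to the origin. The toy model below is our own simplification, not Bunny.net’s implementation; `CacheNode`, the node names, and the single shared parent tier are assumptions chosen to show why the origin sees roughly one request per file rather than one per edge.

```python
from typing import Callable, Dict, List, Optional


class CacheNode:
    """Toy CDN node: answer from local cache, else pull from a parent node or the origin."""

    def __init__(
        self,
        name: str,
        parent: Optional["CacheNode"] = None,
        origin: Optional[Callable[[str], bytes]] = None,
    ) -> None:
        if (parent is None) == (origin is None):
            raise ValueError("give exactly one of parent or origin")
        self.name = name
        self.parent = parent
        self.origin = origin
        self.cache: Dict[str, bytes] = {}

    def get(self, path: str) -> bytes:
        if path in self.cache:
            return self.cache[path]  # cache hit: no upstream traffic at all
        # Cache miss: ask the next tier up (another CDN node) or, at the top, the origin.
        body = self.parent.get(path) if self.parent is not None else self.origin(path)
        self.cache[path] = body
        return body


origin_requests: List[str] = []


def origin_server(path: str) -> bytes:
    """Stands in for our app server; records every request it receives."""
    origin_requests.append(path)
    return b"contents of " + path.encode()


shield = CacheNode("shield", origin=origin_server)
edges = [CacheNode(f"edge-{i}", parent=shield) for i in range(5)]

for edge in edges:
    edge.get("/static/img/logo.png")

print(len(origin_requests))  # 1: five edge misses, one origin fetch
```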

Finally, we all know that with enough complexity and money, you can solve almost any scaling problem. But this was not expensive:

I switched most of our traffic over the past 2 days. So far, we’ve spent $2 in total.
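As a rough cross-check, here is the back-of-the-envelope math, assuming every gigabyte is billed at the flat $0.01/GB rate quoted earlier. CDN pricing commonly differs by region, so the gigabyte figure is an upper bound rather than a measurement. The helper names are made up for this sketch.

```python
from decimal import Decimal

# Flat rate quoted in the post; real pricing may differ by region (assumption).
PRICE_PER_GB = Decimal("0.01")


def transfer_cost(gigabytes: Decimal, price_per_gb: Decimal = PRICE_PER_GB) -> Decimal:
    """Dollar cost of serving `gigabytes` of CDN traffic at a flat per-GB rate."""
    return gigabytes * price_per_gb


def gigabytes_for_spend(dollars: Decimal, price_per_gb: Decimal = PRICE_PER_GB) -> Decimal:
    """Upper bound on traffic covered by a given spend, if every GB costs `price_per_gb`."""
    return dollars / price_per_gb


print(transfer_cost(Decimal("1")))                 # 0.01 (the first GB)
print(f"{gigabytes_for_spend(Decimal('2')):.0f}")  # 200 (GB, at most, for $2)
```

`Decimal` is used instead of `float` so that the dollar amounts stay exact.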

I hope my sharing our experience here has given you something concrete to consider when scaling your Python apps (or any web app really) for relatively small teams and deployments.